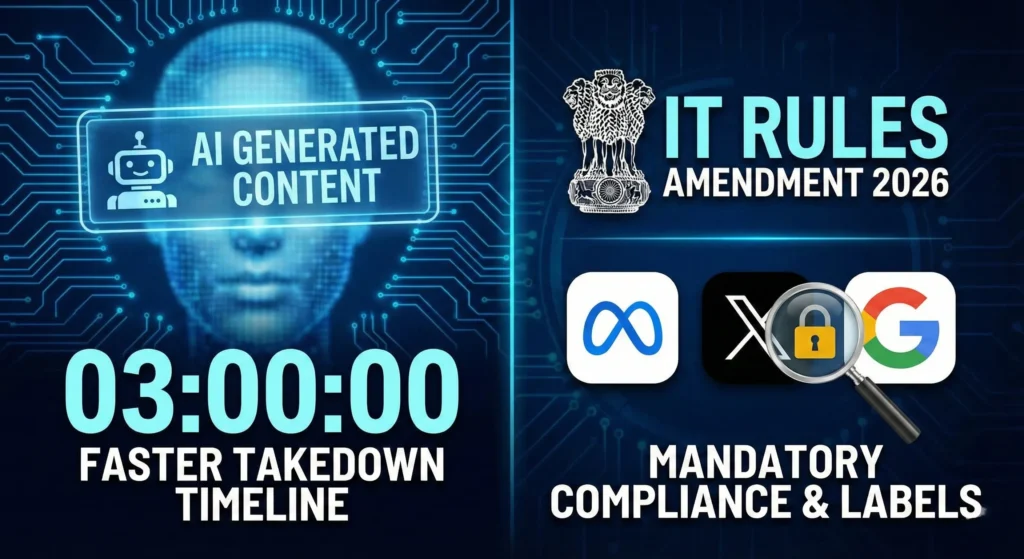

The Indian government has tightened the regulatory framework for Artificial Intelligence (AI) and synthetic media, signaling a zero-tolerance approach to deepfakes and misinformation. On February 10, 2026, the Ministry of Electronics and Information Technology (MeitY) notified amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. The amendments explicitly bring "synthetically generated information" within the scope of strict compliance requirements.

The new rules come into force on February 20, 2026. They require all social media intermediaries, including platforms such as Meta, X (formerly Twitter), and Google, to ensure that any content created, modified, or altered using AI is prominently labeled. The notification defines "synthetically generated information" as audio, visual, or audio-visual content that has been artificially created or modified using a computer resource in a way that makes it appear authentic.

Key Mandates for Platforms:

- Mandatory Labeling: Platforms must deploy technical measures to identify AI-generated content. Users who upload such content will be required to declare it, and the platform must display a clear, visible label identifying it as "synthetically generated."

- Metadata Tracing: Intermediaries are now required to embed permanent metadata or unique identifiers to trace the origin of the computer resource used to generate or modify the content. Crucially, platforms are prohibited from removing this metadata.

- Faster Takedown Timelines: In a significant shift, the amendment sharply shortens compliance deadlines. Intermediaries must now act on lawful orders to remove specific content within three hours, down from the previous 36-hour window. The response period for user grievances has also been cut, from 15 days to seven days.

The government has clarified that the rules target malicious deepfakes and misinformation. Routine editing and the good-faith creation of educational material will be exempt. At the same time, the rules explicitly prohibit AI-generated content involving child sexual abuse material, non-consensual intimate imagery, or misleading portrayals of real events.

Platforms that fail to meet these enhanced due-diligence requirements will lose their "safe harbor" protection under Section 79 of the Information Technology Act, 2000. They would then be legally liable for third-party content hosted on their services. The move is seen as a critical step in safeguarding the integrity of India's digital ecosystem amid the rapid proliferation of generative AI tools.